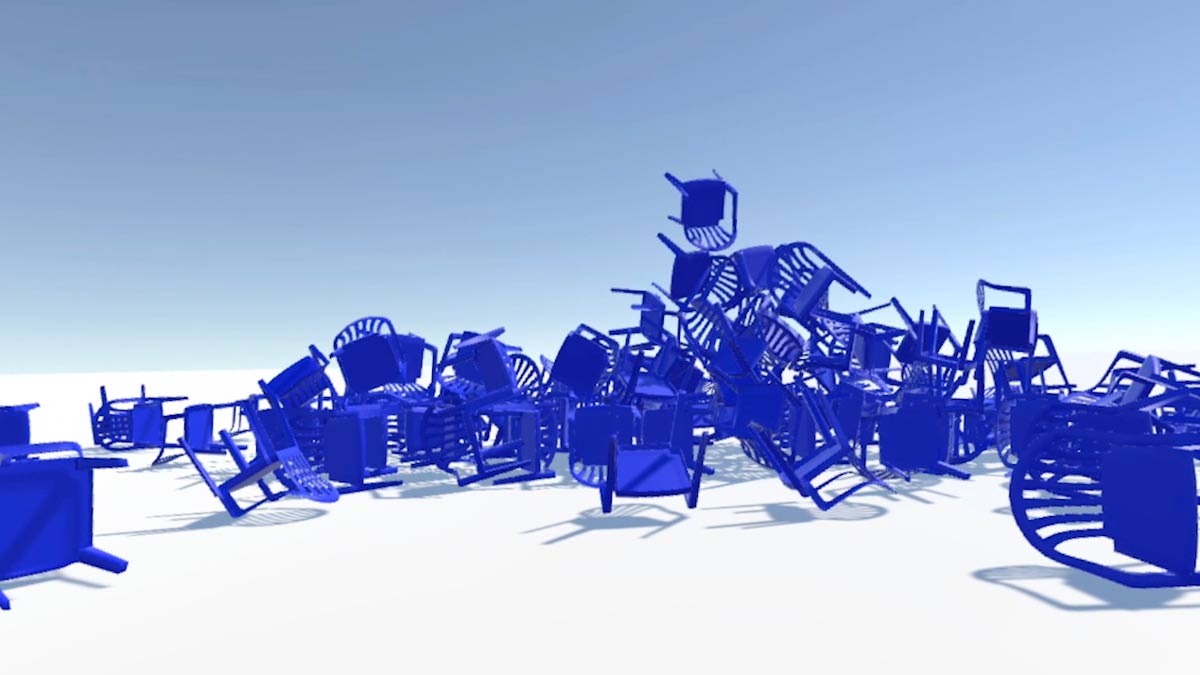

VR sketch: The virtual reality environment [tentatively referred to as ‘vox’] consists of an endless plane under a cloudless sky. Its generative mechanics operate under the following rules: [1] when the environment’s artificial intelligence recognizes a noun spoken by the user as part of its language set, the signified object is produced within the virtual space; [2] the quantity, properties and behavior of these objects are modified by any recognized adjectives and verbs spoken before or after the signifier noun; [3] the character of the object’s propagation within the environment (force, direction, and so forth) is determined by the position and movement of the user’s body at the moment the signifier noun is spoken; [4] objects that enter the environment persist within that space and slowly change their properties and behavior over time. A participant’s words can therefore produce an accumulating change within their environment, shaped by how their speech is interpreted under these rules and by the limitations of the artificial intelligence mediating the exchange.
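The four rules can be read as a small interpreter from recognized speech to spawned objects. What follows is a minimal Python sketch under stated assumptions, not the project’s actual implementation. The vocabulary tables (`NOUNS`, `ADJECTIVES`, `QUANTITIES`, `VERBS`), the `Spawn` record, the `interpret` and `age` functions, and the growth `rate` are all hypothetical. It also assumes the speech recognizer delivers each recognized word together with the body’s forward vector and speed sampled at the moment the word was spoken.

```python
from dataclasses import dataclass, field

# Hypothetical vocabulary; the real language set is whatever the
# environment's speech recognition and AI actually support.
NOUNS = {"tree", "stone", "bird"}
ADJECTIVES = {"red": ("color", "red"), "blue": ("color", "blue"),
              "big": ("scale", 2.0), "small": ("scale", 0.5)}
QUANTITIES = {"two": 2, "three": 3, "many": 10}
VERBS = {"float", "spin", "fall"}


@dataclass
class Spawn:
    noun: str
    count: int = 1
    properties: dict = field(default_factory=dict)
    behaviors: list = field(default_factory=list)
    direction: tuple = (0.0, 0.0, 1.0)  # body-forward vector when the noun was spoken
    force: float = 0.0                  # body speed when the noun was spoken


def interpret(words):
    """words: list of (word, forward_vector, body_speed) tuples (assumed input)."""
    spawns = []
    count, props, verbs = 1, {}, []  # modifiers waiting for the next noun
    for word, forward, speed in words:
        w = word.lower()
        if w in QUANTITIES:
            count = QUANTITIES[w]
        elif w in ADJECTIVES:
            key, value = ADJECTIVES[w]
            props[key] = value
        elif w in VERBS:
            # Rule [2] (one assumed reading): a verb modifies the most recent
            # object, or waits for the next noun if none has been spoken yet.
            if spawns:
                spawns[-1].behaviors.append(w)
            else:
                verbs.append(w)
        elif w in NOUNS:
            # Rules [1] and [3]: produce the object, taking its direction and
            # force from the body state at the moment the noun was spoken.
            spawns.append(Spawn(w, count, props, verbs, forward, speed))
            count, props, verbs = 1, {}, []
        # Any other word is ignored: it falls outside the language set.
    if spawns and props:
        # Trailing adjectives ("a stone, red") attach to the last noun.
        spawns[-1].properties.update(props)
    return spawns


def age(spawn, dt, rate=0.01):
    """Rule [4]: persisted objects drift over time. As an assumed example,
    scale grows by a fraction `rate` per second of elapsed time `dt`."""
    scale = spawn.properties.get("scale", 1.0)
    spawn.properties["scale"] = scale * (1.0 + rate * dt)


# Usage: "two red bird float ... big stone", spoken while turning and slowing.
heard = [("two", (0.0, 0.0, 1.0), 0.1),
         ("red", (0.0, 0.0, 1.0), 0.1),
         ("bird", (1.0, 0.0, 0.0), 1.5),
         ("float", (1.0, 0.0, 0.0), 1.5),
         ("big", (0.0, 0.0, 1.0), 0.0),
         ("stone", (0.0, 0.0, 1.0), 0.0)]
for s in interpret(heard):
    print(s)
```

Attaching adjectives forward and verbs backward is only one way to resolve rule [2]’s “precede or follow”; a fuller version would lean on a proper parser rather than word-by-word lookup.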

While a project is in development, I generally keep a personal video log of its progress (entries 1-3 are included below). This is partly to mark key points of progress and to make sure I can return to decisions that may affect the project much later on, but mostly it is an attempt to capture the process of ‘working through.’

_1 Voice Recognition

_2 Color & Size

_3 Gaze Instantiation